NPTEL Deep Learning – IIT Ropar Week 11 Assignment Answers 2025

1. For which of the following problems are RNNs suitable?

- Generating a description from a given image.

- Forecasting the weather for the next N days based on historical weather data.

- Converting a speech waveform into text.

- Identifying all objects in a given image.

Answer :- For Answers Click Here

2. Suppose that we need to develop an RNN model for sentiment classification. The input to the model is a sentence composed of five words and the output is the sentiments (positive or negative). Assume that each word is represented as a vector of length 100×1 and the output labels are one-hot encoded. Further, the state vector st is initialized with all zeros of size 30×1. How many parameters (including bias) are there in the network?

Answer :-

3. Select the correct statements about GRUs

- GRUs have fewer parameters compared to LSTMs

- GRUs use a single gate to control both input and forget mechanisms

- GRUs are less effective than LSTMs in handling long-term dependencies

- GRUs are a type of feedforward neural network

Answer :-

4. What is the main advantage of using GRUs over traditional RNNs?

- They are simpler to implement

- They solve the vanishing gradient problem

- They require less computational power

- They can handle non-sequential data

Answer :-

5. The statement that LSTM and GRU solves both the problem of vanishing and exploding gradients in RNN is

- True

- False

Answer :- For Answers Click Here

6. What is the vanishing gradient problem in training RNNs?

- The weights of the network converge to zero during training

- The gradients used for weight updates become too large

- The network becomes overfit to the training data

- The gradients used for weight updates become too small

Answer :-

7. What is the role of the forget gate in an LSTM network?

- To determine how much of the current input should be added to the cell state.

- To determine how much of the previous time step’s cell state should be retained.

- To determine how much of the current cell state should be output.

- To determine how much of the current input should be output.

Answer :-

8. How does LSTM prevent the problem of vanishing gradients?

- Different activation functions, such as ReLU, are used instead of sigmoid in LSTM.

- Gradients are normalized during backpropagation.

- The learning rate is increased in LSTM.

- Forget gates regulate the flow of gradients during backpropagation.

Answer :-

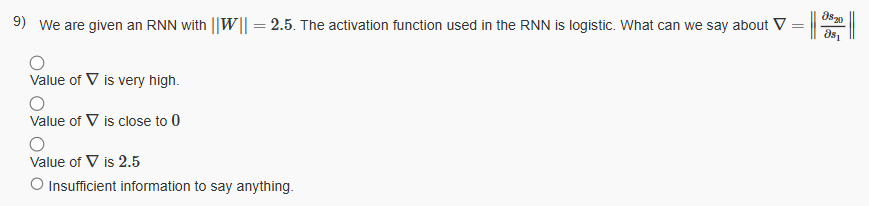

9.

Answer :-

10. Select the true statements about BPTT?

- The gradients of Loss with respect to parameters are added across time steps

- The gradients of Loss with respect to parameters are subtracted across time steps

- The gradient may vanish or explode, in general,if timesteps are too large

- The gradient may vanish or explode if timesteps are too small

Answer :- For Answers Click Here