NPTEL Introduction to Machine Learning Week 3 Assignment Answers 2025

1. Which of the following statement(s) about decision boundaries and discriminant functions of classifiers is/are true?

- In a binary classification problem, all points x on the decision boundary satisfy δ1(x)=δ2(x).

- In a three-class classification problem, all points on the decision boundary satisfy δ1(x)=δ2(x)=δ3(x).

- In a three-class classification problem, all points on the decision boundary satisfy at least one of δ1(x)=δ2(x), δ2(x)=δ3(x) or δ3(x)=δ1(x).

- If x does not lie on the decision boundary then all points lying in a sufficiently small neighbourhood around x belong to the same class.

Answer :-
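To make the discriminant-function view concrete, here is a minimal sketch with three hypothetical 1-D linear discriminants δk(x) = wk·x + bk. The coefficients are made up for illustration. Each point goes to the class with the largest δk, and it lies on the decision boundary exactly when the two largest values tie.

```python
# Hypothetical 1-D linear discriminants delta_k(x) = w_k * x + b_k (made-up values).
DISCRIMINANTS = {1: (1.0, 0.0), 2: (0.0, 1.0), 3: (-1.0, 0.0)}

def scores(x):
    """Evaluate every class discriminant at x."""
    return {k: w * x + b for k, (w, b) in DISCRIMINANTS.items()}

def predict(x):
    """Assign x to the class whose discriminant is largest."""
    s = scores(x)
    return max(s, key=s.get)

def on_boundary(x, tol=1e-9):
    """x is on the decision boundary when the two largest discriminants tie."""
    top, second = sorted(scores(x).values(), reverse=True)[:2]
    return abs(top - second) <= tol

print(on_boundary(1.0))   # delta_1 = delta_2 = 1 > delta_3 = -1
print(on_boundary(0.0))   # delta_1 = delta_3 = 0, but delta_2 = 1 is larger
print(predict(0.0))
```

With these coefficients, x = 1 is a boundary point where only δ1 and δ2 tie. By contrast, x = 0 satisfies δ1(x) = δ3(x) but still lies inside class 2's region, because δ2 is larger there.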

2. You train an LDA classifier on a dataset with 2 classes. The decision boundary is significantly different from the one obtained by logistic regression. What could be the reason?

- The underlying data distribution is Gaussian

- The two classes have equal covariance matrices

- The underlying data distribution is not Gaussian

- The two classes have unequal covariance matrices

Answer :-
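LDA assumes that each class is Gaussian with a covariance shared across classes, which makes the log posterior ratio linear in x. The minimal 1-D sketch below uses hypothetical means and standard deviations. It shows that when the class variances differ, the Bayes-optimal log ratio becomes quadratic and can have two boundary points. A single linear LDA boundary cannot reproduce that, so LDA and logistic regression may then settle on different lines.

```python
import math

def log_ratio_coeffs(mu1, s1, mu2, s2, pi1=0.5, pi2=0.5):
    """Coefficients (a, b, c) of log[pi1 f1(x) / (pi2 f2(x))] = a x^2 + b x + c for 1-D Gaussians."""
    a = -0.5 / s1 ** 2 + 0.5 / s2 ** 2
    b = mu1 / s1 ** 2 - mu2 / s2 ** 2
    c = (-0.5 * mu1 ** 2 / s1 ** 2 + 0.5 * mu2 ** 2 / s2 ** 2
         + math.log(s2 / s1) + math.log(pi1 / pi2))
    return a, b, c

def bayes_boundaries(a, b, c):
    """Real roots of a x^2 + b x + c = 0: the points where the two posteriors are equal."""
    if a == 0:  # equal variances: the quadratic term cancels and the boundary is linear
        return [-c / b]
    disc = b * b - 4 * a * c
    if disc < 0:
        return []
    r = math.sqrt(disc)
    return sorted([(-b - r) / (2 * a), (-b + r) / (2 * a)])

# Hypothetical class parameters.
linear = bayes_boundaries(*log_ratio_coeffs(0.0, 1.0, 2.0, 1.0))     # one boundary point
quadratic = bayes_boundaries(*log_ratio_coeffs(0.0, 1.0, 2.0, 2.0))  # two boundary points
```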

3. The following table gives the binary ground-truth labels yi for four input points xi (not given). We have a logistic regression model with some parameter values that computes the probability p1(xi) that the label is 1. Compute the likelihood of observing the data given these model parameters.

- 0.072

- 0.144

- 0.288

- 0.002

Answer :-
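The table for this question is not reproduced here, so the sketch below uses hypothetical labels and probabilities rather than the assignment's values. If the four points are assumed independent, the likelihood is a product of one factor per point: p1(xi) for each point with yi = 1, and 1 − p1(xi) for each point with yi = 0. In practice one usually works with its logarithm.

```python
import math

def likelihood(labels, p1):
    """Product of Bernoulli probabilities: p1 if y == 1, else 1 - p1 (assumes independent points)."""
    result = 1.0
    for y, p in zip(labels, p1):
        result *= p if y == 1 else 1.0 - p
    return result

def log_likelihood(labels, p1):
    """Sum of log-probabilities; numerically safer than the raw product for many points."""
    return sum(math.log(p if y == 1 else 1.0 - p) for y, p in zip(labels, p1))

# Hypothetical example values, NOT the table from the assignment.
labels = [1, 0, 1, 1]
p1 = [0.8, 0.4, 0.5, 0.9]
lik = likelihood(labels, p1)  # 0.8 * 0.6 * 0.5 * 0.9
```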

4. Which of the following statement(s) about logistic regression is/are true?

- It learns a model for the probability distribution of the data points in each class.

- The output of a linear model is transformed to the range (0, 1) by a sigmoid function.

- The parameters are learned by minimizing the mean-squared loss.

- The parameters are learned by maximizing the log-likelihood.

Answer :-
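As usually formulated, logistic regression passes a linear score β0 + β1x through the sigmoid to get a probability in (0, 1). It then chooses the parameters that maximise the log-likelihood of the labels. A minimal 1-D sketch of that recipe follows. The toy data, the learning rate, and the use of plain gradient ascent (rather than, say, Newton's method) are assumptions made for illustration.

```python
import math

def sigmoid(z):
    """Map a real-valued linear score to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood(xs, ys, b0, b1):
    """Bernoulli log-likelihood of labels ys under p1(x) = sigmoid(b0 + b1 * x)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(b0 + b1 * x)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total

def fit(xs, ys, lr=0.1, steps=2000):
    """Maximise the log-likelihood by plain gradient ascent (one simple choice of optimiser)."""
    b0, b1 = 0.0, 0.0
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = y - sigmoid(b0 + b1 * x)  # derivative of one point's log-likelihood w.r.t. its score
            g0 += err
            g1 += err * x
        b0 += lr * g0
        b1 += lr * g1
    return b0, b1

# Hypothetical toy data with overlapping classes, so the maximum-likelihood estimate is finite.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]
b0, b1 = fit(xs, ys)
```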

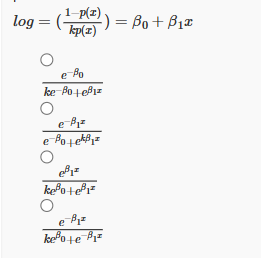

5. Consider a modified form of logistic regression given below where k is a positive constant and β0 and β1 are parameters.

Answer :-

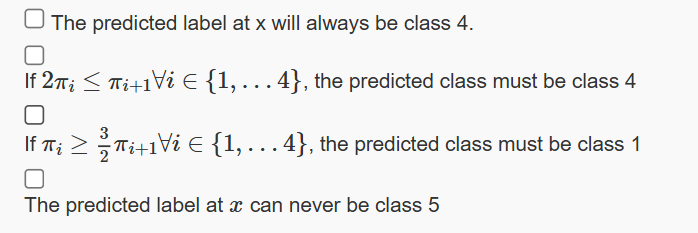

6. Consider a Bayesian classifier for a 5-class classification problem. The following tables give the class-conditioned density fk(x) for class k∈{1,2,…,5} at some point x in the input space.

Let πk denote the prior probability of class k. Which of the following statement(s) about the predicted label at x is/are true? (One or more choices may be correct.)

Answer :-
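The tables for this question are not reproduced here. As a sketch with hypothetical values, a Bayes classifier scores class k at x by πk·fk(x), which is proportional to the posterior P(k | x), and predicts the class with the largest score. The values of fk(x) and πk below are invented for illustration.

```python
def posteriors(priors, densities):
    """Bayes' rule: P(k | x) = pi_k f_k(x) / sum_j pi_j f_j(x)."""
    joint = [p * f for p, f in zip(priors, densities)]
    total = sum(joint)
    return [j / total for j in joint]

def predict(priors, densities):
    """Return the 1-based class label with the largest pi_k * f_k(x)."""
    joint = [p * f for p, f in zip(priors, densities)]
    return 1 + max(range(len(joint)), key=joint.__getitem__)

# Hypothetical densities f_k(x) at one point x for k = 1..5 (not the assignment's table).
densities = [0.10, 0.25, 0.15, 0.30, 0.20]
uniform = [0.2] * 5
skewed = [0.1, 0.5, 0.1, 0.2, 0.1]

print(predict(uniform, densities), predict(skewed, densities))
```

With uniform priors the prediction is simply the class with the largest fk(x), which is class 4 here. The skewed priors move it to class 2, so statements about the predicted label generally depend on the πk.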

7. Which of the following statement(s) about a two-class LDA classification model is/are true?

- On the decision boundary, the prior probabilities corresponding to both classes must be equal.

- On the decision boundary, the posterior probabilities corresponding to both classes must be equal.

- On the decision boundary, class-conditioned probability densities corresponding to both classes must be equal.

- On the decision boundary, the class-conditioned probability densities corresponding to both classes may or may not be equal.

Answer :-
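In two-class LDA the boundary is the set of points where π1 f1(x) = π2 f2(x), i.e. where the two posteriors are equal. The 1-D sketch below uses hypothetical means, a shared standard deviation, and deliberately unequal priors. It locates that point and compares the class-conditioned densities there.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Univariate normal density."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def lda_boundary_1d(mu1, mu2, sigma, pi1, pi2):
    """Point where pi1 * f1(x) = pi2 * f2(x) for two Gaussians sharing sigma (requires mu1 != mu2)."""
    return 0.5 * (mu1 + mu2) + sigma ** 2 * math.log(pi1 / pi2) / (mu2 - mu1)

# Hypothetical parameters: unequal priors, shared standard deviation.
mu1, mu2, sigma, pi1, pi2 = 0.0, 2.0, 1.0, 0.8, 0.2
x_star = lda_boundary_1d(mu1, mu2, sigma, pi1, pi2)
f1, f2 = gaussian_pdf(x_star, mu1, sigma), gaussian_pdf(x_star, mu2, sigma)
post1 = pi1 * f1 / (pi1 * f1 + pi2 * f2)  # posterior of class 1 at the boundary
```

At the boundary the posterior of class 1 is 0.5, while f1 and f2 differ by the factor π2/π1. Setting pi1 = pi2 makes the densities equal there as well.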

8. Consider the following two datasets and two LDA classifier models trained respectively on these datasets.

Dataset A: 200 samples of class 0; 50 samples of class 1

Dataset B: 200 samples of class 0 (same as Dataset A); 100 samples of class 1 created by repeating twice the class 1 samples from Dataset A

Let the decision boundary learned by each classifier be of the form wᵀx + b = 0, where w is the slope and b is the intercept. Which of the given statements is true?

- The learned decision boundary will be the same for both models.

- The two models will have the same slope but different intercepts.

- The two models will have different slopes but the same intercept.

- The two models may have different slopes and different intercepts.

Answer :-
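Duplicating the class-1 samples leaves the class-1 mean unchanged. It does, however, change two other estimates that LDA uses: the class-1 prior, and the weight of class 1 in the pooled covariance. The minimal 1-D sketch below fits LDA to both versions, with small hypothetical samples standing in for the 200/50 split. It assumes priors are estimated from class counts and that the pooled variance uses the N − K denominator. The MLE denominator N gives the same qualitative picture.

```python
import math

def mean(values):
    return sum(values) / len(values)

def lda_1d(class0, class1):
    """Fit 1-D LDA; return (w, b) with log-odds(x) = w * x + b and boundary w * x + b = 0."""
    m0, m1 = mean(class0), mean(class1)
    n0, n1 = len(class0), len(class1)
    # Pooled within-class variance with the N - K denominator (one common estimator choice).
    scatter = sum((x - m0) ** 2 for x in class0) + sum((x - m1) ** 2 for x in class1)
    var = scatter / (n0 + n1 - 2)
    pi0, pi1 = n0 / (n0 + n1), n1 / (n0 + n1)  # priors estimated from class counts
    w = (m1 - m0) / var
    b = -(m1 ** 2 - m0 ** 2) / (2 * var) + math.log(pi1 / pi0)
    return w, b

# Hypothetical small samples standing in for the 200/50 split in the question.
class0 = [0.0, 1.0, 2.0, 3.0]
class1 = [4.0, 6.0]
w_a, b_a = lda_1d(class0, class1)      # "Dataset A"
w_b, b_b = lda_1d(class0, class1 * 2)  # "Dataset B": each class-1 sample appears twice
```

In 1-D, w is a scalar, so here the duplication only rescales the slope and shifts the intercept. In higher dimensions, the reweighted pooled covariance can also change the direction of w.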

9. Which of the following statement(s) about LDA is/are true?

- It minimizes the inter-class variance relative to the intra-class variance.

- It maximizes the inter-class variance relative to the intra-class variance.

- Maximizing the Fisher information results in the same direction of the separating hyperplane as the one obtained by equating the posterior probabilities of classes.

- Maximizing the Fisher information results in a different direction of the separating hyperplane from the one obtained by equating the posterior probabilities of classes.

Answer :-
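The last two options compare two criteria. One is Fisher's criterion J(w) = (wᵀ(μ1 − μ0))² / (wᵀ S_W w), where S_W is the pooled within-class scatter. The other is the equal-posterior LDA rule, whose normal vector is proportional to S_W⁻¹(μ1 − μ0). The pooled covariance differs from S_W only by a constant factor, which does not affect direction. As a numerical check on hypothetical 2-D data, the sketch below maximises J by brute-force search over angles and compares the result with that closed-form direction.

```python
import math

def mean2(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def scatter2(points):
    """Within-class scatter sum (p - m)(p - m)^T for 2-D points, returned as (sxx, sxy, syy)."""
    mx, my = mean2(points)
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    return sxx, sxy, syy

# Hypothetical 2-D samples (not from the assignment).
class0 = [(0.0, 0.0), (1.0, 1.0), (2.0, 1.0), (1.0, 0.0)]
class1 = [(3.0, 2.0), (4.0, 4.0), (5.0, 4.0), (4.0, 2.0)]

(m0x, m0y), (m1x, m1y) = mean2(class0), mean2(class1)
dx, dy = m1x - m0x, m1y - m0y
a0, a1 = scatter2(class0), scatter2(class1)
sxx, sxy, syy = (u + v for u, v in zip(a0, a1))  # pooled within-class scatter S_W

def fisher_J(theta):
    """Fisher criterion: between-class separation over within-class spread along w(theta)."""
    wx, wy = math.cos(theta), math.sin(theta)
    between = (wx * dx + wy * dy) ** 2
    within = wx * wx * sxx + 2 * wx * wy * sxy + wy * wy * syy
    return between / within

# Brute-force search over directions in [0, pi) for the Fisher-optimal w.
steps = 20000
theta_fisher = max((math.pi * i / steps for i in range(steps)), key=fisher_J)

# Direction from equating LDA posteriors: w proportional to S_W^{-1} (m1 - m0).
det = sxx * syy - sxy * sxy
lx, ly = (syy * dx - sxy * dy) / det, (-sxy * dx + sxx * dy) / det
theta_lda = math.atan2(ly, lx) % math.pi

gap = abs(theta_fisher - theta_lda) % math.pi
gap = min(gap, math.pi - gap)  # angular difference between the two directions, modulo pi
```

For this data the two directions agree to within the grid resolution. Both differ noticeably from the raw mean-difference direction, because S_W is not a multiple of the identity.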

10. Which of the following statement(s) regarding logistic regression and LDA is/are true for a binary classification problem?

- For any classification dataset, both algorithms learn the same decision boundary.

- Adding a few outliers to the dataset is likely to cause a larger change in the decision boundary of LDA compared to that of logistic regression.

- Adding a few outliers to the dataset is likely to cause a similar change in the decision boundaries of both classifiers.

- If the intra-class distributions deviate significantly from the Gaussian distribution, logistic regression is likely to perform better than LDA.

Answer :-