NPTEL Data Mining Week 3 Assignment Answers 2025

1. A decision tree is an algorithm for:

A. Classification

B. Clustering

C. Association rule mining

D. Noise filtering

Answer :-

2. Leaf nodes of a decision tree correspond to:

A. Attributes

B. Classes

C. Data instances

D. None of the above

Answer :-

3. Non-leaf nodes of a decision tree correspond to:

A. Attributes

B. Classes

C. Data instances

D. None of the above

Answer :-

4. Which of the following criteria is used to decide which attribute to split on next in a decision tree?

A. Support

B. Confidence

C. Entropy

D. Scatter

Answer :-

5. If we convert a decision tree to a set of logical rules, then:

A. the internal nodes in a branch are connected by AND and the branches by AND

B. the internal nodes in a branch are connected by OR and the branches by OR

C. the internal nodes in a branch are connected by AND and the branches by OR

D. the internal nodes in a branch are connected by OR and the branches by AND

Answer :-
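To make the path-to-rule conversion in question 5 concrete, here is a minimal sketch. The toy tree, its attribute names, and the helper functions `paths` and `rules_for` are hypothetical and chosen only for illustration; they are not taken from the assignment. The sketch assumes the usual reading in which the tests along one root-to-leaf path form a conjunction, and the paths ending in the same class are alternatives.

```python
# Hypothetical toy tree (not from the assignment): an internal node is a pair
# (attribute, {value: child}); a leaf is a class label stored as a string.
tree = ("A1", {0: "normal", 1: ("A2", {0: "normal", 1: "fraud"})})


def paths(node, conditions=()):
    """Yield (conditions, label) for every root-to-leaf path of the tree."""
    if isinstance(node, str):  # reached a leaf
        yield conditions, node
        return
    attribute, children = node
    for value, child in children.items():
        yield from paths(child, conditions + ((attribute, value),))


def rules_for(tree, label):
    """Build one rule string covering every path that ends in `label`."""
    conjunctions = [
        " AND ".join(f"{attr}={val}" for attr, val in conds)
        for conds, leaf in paths(tree)
        if leaf == label
    ]
    return " OR ".join(f"({c})" for c in conjunctions)


print(rules_for(tree, "normal"))
print(rules_for(tree, "fraud"))
```

Each parenthesized group corresponds to one branch of the toy tree, so a class reached by several branches gets several groups.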

6. The purpose of pruning a decision tree is:

A. improving training set classification accuracy

B. improving generalization performance

C. dimensionality reduction

D. tree balancing

Answer :-

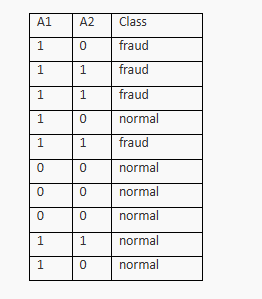

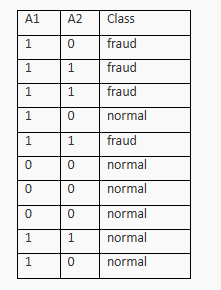

7. Consider the following training set for a classification problem with two classes, “fraud” and “normal”. There are two attributes, A1 and A2, each taking the value 0 or 1. Splitting on which attribute at the root of a decision tree leads to the highest information gain?

A. A1

B. A2

C. There will be a tie among the attributes

D. Not enough information to decide

Answer :-

8.

A. 0

B. -(4/10) × log(4/10) - (6/10) × log(6/10)

C. -log(4/10) - log(6/10)

D. 1

Answer :-

9. Consider the following training set for a classification problem with two classes, “fraud” and “normal”. There are two attributes, A1 and A2, each taking the value 0 or 1. Splitting on attribute A1 at the root leads to an entropy reduction of:

A. -log(4/10) - log(6/10)

B. -(4/10) × log(4/10) - (6/10) × log(6/10) + (4/7) × log(4/7) + (3/7) × log(3/7)

C. -(4/10) × log(4/10) - (6/10) × log(6/10) + (4/7) × log(4/7) + (3/7) × log(3/7) + 1

D. 1

Answer :-

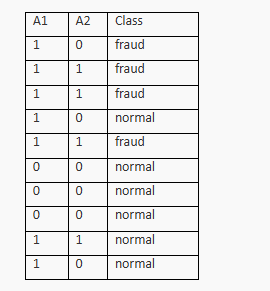

10. Consider the following training set for a classification problem with two classes, “fraud” and “normal”. There are two attributes, A1 and A2, each taking the value 0 or 1. Splitting on attribute A2 at the root leads to an entropy reduction of:

A. -log(4/10) - log(6/10)

B. -(4/10) × log(4/10) - (6/10) × log(6/10) + (1/4) × log(1/4) + (3/7) × log(3/7)

C. -(4/10) × log(4/10) - (6/10) × log(6/10) + (3/4) × log(3/4) + (1/4) × log(1/4) + (1/6) × log(1/6) + (5/6) × log(5/6)

D. 1

Answer :-
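Questions 7 to 10 all rest on entropy and information gain (entropy reduction). The sketch below shows one common way to compute both from class counts. It is an illustration under stated assumptions: the counts are hypothetical because the actual training set appears only in the images, the function names are made up for this example, and base-2 logarithms are assumed since the questions write `log` without a base.

```python
from math import log2


def entropy(counts):
    """Shannon entropy (base 2, an assumption) of a list of class counts."""
    total = sum(counts)
    # Empty classes contribute nothing, so skip them to avoid log2(0).
    return sum(-(c / total) * log2(c / total) for c in counts if c > 0)


def information_gain(parent_counts, children_counts):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    total = sum(parent_counts)
    weighted = sum(
        (sum(child) / total) * entropy(child) for child in children_counts
    )
    return entropy(parent_counts) - weighted


# Hypothetical counts, NOT the assignment's data:
# 10 instances, 5 per class, split by a binary attribute into two branches.
parent = [5, 5]
branches = [[4, 1], [1, 4]]

gain = information_gain(parent, branches)
print(f"parent entropy = {entropy(parent):.4f}")
print(f"information gain = {gain:.4f}")
```

The attribute chosen for a split is the one with the largest gain, so in practice `information_gain` would be evaluated once per candidate attribute and the results compared.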

11. Decision trees can be used for:

A. Classification only

B. Regression only

C. Both classification and regression

D. Neither classification nor regression

Answer :-