NPTEL Data Mining Week 4 Assignment Answers 2025

1. Maximum aposteriori classifier is also known as:

A. Decision tree classifier

B. Bayes classifier

C. Gaussian classifier

D. Maximum margin classifier

Answer :-

2. If we are provided with an infinitely large training set, which of the following classifiers will have the lowest error probability?

A. Decision tree

B. K-nearest neighbor classifier

C. Bayes classifier

D. Support vector machine

Answer :-

3. Let A be an example, and C be a class. The probability P(C|A) is known as:

A. Apriori probability

B. Aposteriori probability

C. Class conditional probability

D. None of the above

Answer :-

4. Let A be an example, and C be a class. The probability P(C) is known as:

A. Apriori probability

B. Aposteriori probability

C. Class conditional probability

D. None of the above

Answer :-

5. A bank classifies its customers into two classes, “fraud” and “normal”, based on their installment payment behavior. We know that the probability of a customer being a fraud is P(fraud) = 0.20, the probability of a customer defaulting on an installment payment is P(default) = 0.40, and the probability that a fraud customer defaults on an installment payment is P(default|fraud) = 0.80. What is the probability that a customer who defaults on a payment is a fraud?

A. 0.80

B. 0.60

C. 0.40

D. 0.20

Answer :-
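The quantities in question 5 fit Bayes' theorem, P(C|A) = P(A|C) · P(C) / P(A). The following is a minimal sketch of that arithmetic; the function name `posterior` and the variable names are illustrative choices, not part of the assignment.

```python
def posterior(prior, likelihood, evidence):
    """Bayes' theorem: P(C|A) = P(A|C) * P(C) / P(A)."""
    if evidence <= 0:
        raise ValueError("evidence probability must be positive")
    return likelihood * prior / evidence


# Values taken from the question statement.
p_fraud = 0.20                # P(fraud), the prior
p_default = 0.40              # P(default), the evidence
p_default_given_fraud = 0.80  # P(default|fraud), the class conditional

p_fraud_given_default = posterior(p_fraud, p_default_given_fraud, p_default)
print(round(p_fraud_given_default, 2))  # 0.4
```

The result is rounded only for display, since floating-point multiplication and division can leave a tiny residue.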

6. Consider two binary attributes X and Y. We know that the attributes are independent, with P(X=1) = 0.6 and P(Y=0) = 0.4. What is the probability that both X and Y have the value 1?

A. 0.06

B. 0.16

C. 0.26

D. 0.36

Answer :-
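For question 6, independence means the joint probability factorizes as P(X=1, Y=1) = P(X=1) · P(Y=1), and because Y is binary, P(Y=1) = 1 − P(Y=0). A short sketch of that calculation, with variable names chosen here for illustration:

```python
# Values taken from the question statement.
p_x1 = 0.6  # P(X=1)
p_y0 = 0.4  # P(Y=0)

# Y is binary, so P(Y=1) is the complement of P(Y=0).
p_y1 = 1 - p_y0

# Independence lets the joint probability factorize into a product.
p_both = p_x1 * p_y1
print(round(p_both, 2))  # 0.36
```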

7. Consider a binary classification problem with two classes C1 and C2. The class labels of ten other training set instances, sorted in increasing order of their distance to an instance x, are as follows: {C1, C2, C1, C2, C2, C2, C1, C2, C1, C2}. How will a K=7 nearest neighbor classifier classify x?

A. There will be a tie

B. C1

C. C2

D. Not enough information to classify

Answer :-
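Question 7 comes down to a majority vote over the first K labels in the distance-sorted list. The sketch below assumes unweighted voting and reports a tie as `None`; the helper name `knn_vote` is hypothetical and not taken from the course material.

```python
from collections import Counter


def knn_vote(sorted_labels, k):
    """Majority vote over the k nearest labels (unweighted).

    sorted_labels must already be ordered by increasing distance.
    Returns the winning label, or None if the top two counts tie.
    """
    votes = Counter(sorted_labels[:k])
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


# Labels from the question, nearest neighbor first.
labels = ["C1", "C2", "C1", "C2", "C2", "C2", "C1", "C2", "C1", "C2"]
print(knn_vote(labels, 7))  # C2
```

Among the seven nearest neighbors, C2 appears four times and C1 three times, so the vote is not tied.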

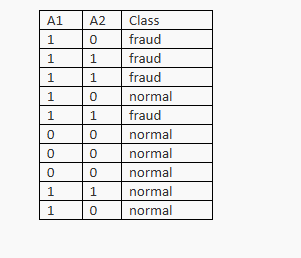

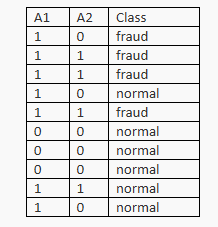

8. Given the following training set for a classification problem with two classes, “fraud” and “normal”. There are two attributes A1 and A2 taking values 0 or 1. What is the estimated apriori probability P(fraud) of the class fraud?

A. 0.2

B. 0.4

C. 0.6

D. 0.8

Answer :-

9. Given the following training set for a classification problem with two classes, “fraud” and “normal”. There are two attributes A1 and A2 taking values 0 or 1. What is the estimated class conditional probability P(A1=1, A2=1|fraud)?

A. 0.25

B. 0.50

C. 0.75

D. 1.00

Answer :-

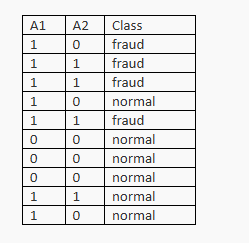

10. Given the following training set for a classification problem with two classes, “fraud” and “normal”. There are two attributes A1 and A2 taking values 0 or 1. Into which class does the Bayes classifier classify the instance (A1=1, A2=1)?

A. fraud

B. normal

C. there will be a tie

D. not enough information to classify

Answer :-