NPTEL Introduction to Machine Learning Week 4 Assignment Answers 2025

1. The Perceptron Learning Algorithm can always converge to a solution if the dataset is linearly separable.

- True

- False

- Depends on learning rate

- Depends on initial weights

Answer :-
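The update rule this question refers to can be sketched in a few lines of plain Python. This is a minimal illustration, not the course's reference implementation: the function name `train_perceptron`, the toy points, and the `max_epochs` cap are all assumptions made for the example. The learning rate and starting weights are exposed so their effect on a linearly separable dataset can be tried directly.

```python
def train_perceptron(points, labels, learning_rate=1.0, max_epochs=1000):
    """Classic perceptron rule; labels must be +1 or -1."""
    weights = [0.0] * len(points[0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for x, y in zip(points, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * activation <= 0:  # misclassified, or exactly on the boundary
                weights = [w + learning_rate * y * xi for w, xi in zip(weights, x)]
                bias += learning_rate * y
                mistakes += 1
        if mistakes == 0:
            return weights, bias, epoch  # a full pass with no updates
    return weights, bias, None  # no mistake-free pass within max_epochs


# Hypothetical toy data (not from the assignment), separable by a line
points = [(2.0, 1.0), (1.0, 3.0), (-1.0, -2.0), (-3.0, -1.0)]
labels = [1, 1, -1, -1]

weights, bias, epochs = train_perceptron(points, labels)
print(weights, bias, epochs)
```

Rerunning with a different `learning_rate` changes the learned weights, which is a quick way to explore the options listed above on separable data.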

2. Consider the 1-dimensional dataset:

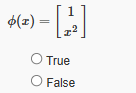

State true or false: The dataset becomes linearly separable after using basis expansion with the following basis function

Answer :-

3. For a binary classification problem with the hinge loss function max(0,1−y(w⋅x)), which of the following statements is correct?

- The loss is zero only when the prediction is exactly equal to the true label

- The loss is zero when the prediction is correct and the margin is at least 1

- The loss is always positive

- The loss increases linearly with the distance from the decision boundary regardless of classification

Answer :-
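To make the formula in this question concrete, the sketch below evaluates max(0, 1 − y(w⋅x)) for a few hand-picked cases. The `score` argument stands in for w⋅x, and the example values are illustrative assumptions, not data from the assignment.

```python
def hinge_loss(y, score):
    """Hinge loss max(0, 1 - y * score) for a label y in {-1, +1}."""
    return max(0.0, 1.0 - y * score)


# (label, score) pairs; score plays the role of w . x
examples = [
    (+1, 2.0),  # correct side, y * score = 2
    (+1, 1.0),  # correct side, y * score exactly 1
    (+1, 0.5),  # correct side, but y * score below 1
    (-1, 0.5),  # wrong side of the boundary
]

losses = [hinge_loss(y, s) for y, s in examples]
print(losses)  # [0.0, 0.0, 0.5, 1.5]
```

Comparing each loss with the value of y * score in the comments is one way to check the four statements above.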

4. For a dataset with n points in d dimensions, what is the maximum number of support vectors possible in a hard-margin SVM?

- 2

- d

- n/2

- n

Answer :-

5. In the context of soft-margin SVM, what happens to the number of support vectors as the parameter C increases?

- Generally increases

- Generally decreases

- Remains constant

- Changes unpredictably

Answer :-
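One way to explore this question empirically is to fit the same soft-margin SVM with several values of C and count the support vectors each time. The sketch below assumes scikit-learn is installed and uses synthetic, overlapping two-class data from `make_blobs`; the data, the linear kernel, and the particular C values are assumptions chosen for illustration only.

```python
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Synthetic, overlapping two-class data (an assumption; not assignment data)
X, y = make_blobs(n_samples=200, centers=2, cluster_std=2.5, random_state=0)

support_counts = {}
for C in (0.01, 1, 100):
    clf = SVC(kernel="linear", C=C).fit(X, y)
    # n_support_ holds the number of support vectors for each class
    support_counts[C] = int(clf.n_support_.sum())

print(support_counts)
```

The counts depend on the data, so it is worth repeating the run with other seeds or cluster spreads before drawing a conclusion.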

6. Consider the following dataset:

Which of these is not a support vector when using a Support Vector Classifier with a polynomial kernel with degree = 3, C = 1, and gamma = 0.1?

(We recommend using sklearn to solve this question.)

- 2

- 1

- 9

- 10

Answer :-
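The sklearn approach recommended above might look like the following sketch. The dataset for this question is not reproduced in this document, so `X` and `y` below are hypothetical placeholder values that must be replaced with the actual points. Note also that `support_` reports 0-based row indices, while the options may number the points differently; that mapping is an assumption to verify against the question.

```python
import numpy as np
from sklearn.svm import SVC

# HYPOTHETICAL placeholder data: replace with the dataset from the question
X = np.array([[1.0, 2.0], [2.0, 3.0], [3.0, 3.0],
              [6.0, 5.0], [7.0, 8.0], [8.0, 8.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# Hyperparameters as stated in the question
clf = SVC(kernel="poly", degree=3, C=1, gamma=0.1).fit(X, y)

support_rows = set(clf.support_.tolist())  # 0-based rows that are support vectors
non_support_rows = sorted(set(range(len(X))) - support_rows)

print("support vectors (0-based rows):", sorted(support_rows))
print("not support vectors (0-based rows):", non_support_rows)
```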

7. Train a linear perceptron classifier on the modified iris dataset. We recommend using sklearn. Use only the first two features for your model and report the best classification accuracy for the l1 and l2 penalty terms.

- 0.91, 0.64

- 0.88, 0.71

- 0.71, 0.65

- 0.78, 0.64

Answer :-
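A possible starting point for this exercise is sketched below. The modified iris dataset is not included in this document, so the standard iris data from `load_iris` is used as a stand-in; the 70/30 stratified split and the `random_state` values are likewise assumptions. The accuracies it prints will therefore not necessarily match any of the options above.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split

# Stand-in data: standard iris, NOT the course's modified iris dataset
X, y = load_iris(return_X_y=True)
X = X[:, :2]  # keep only the first two features

# Assumed evaluation protocol: 70/30 stratified hold-out split
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

accuracy = {}
for penalty in ("l1", "l2"):
    clf = Perceptron(penalty=penalty, random_state=0).fit(X_train, y_train)
    accuracy[penalty] = clf.score(X_test, y_test)  # mean accuracy on held-out data

print(accuracy)
```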

8. Train an SVM classifier on the modified iris dataset. We recommend using sklearn. Use only the first three features. We encourage you to explore the impact of varying the model's hyperparameters, in particular different kernels and their associated hyperparameters. As part of the assignment, train models with the following set of hyperparameters: RBF kernel, gamma = 0.5, one-vs-rest classifier, no feature normalization.

Try C = 0.01, 1, 10. For the above set of hyperparameters, report the best classification accuracy.

- 0.98

- 0.88

- 0.99

- 0.92

Answer :-
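The configuration described in this question could be set up roughly as follows. As before, the standard iris data is a stand-in for the modified dataset, and the train/test split is an assumption, so the printed accuracies need not match the options. The sketch wraps `SVC` in `OneVsRestClassifier` to get an explicit one-vs-rest scheme; whether the assignment intends this or `SVC`'s `decision_function_shape="ovr"` setting is not stated, so treat that choice as an assumption too.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC

# Stand-in data: standard iris, NOT the course's modified iris dataset
X, y = load_iris(return_X_y=True)
X = X[:, :3]  # first three features, left unnormalized

# Assumed evaluation protocol: 70/30 stratified hold-out split
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

results = {}
for C in (0.01, 1, 10):
    clf = OneVsRestClassifier(SVC(kernel="rbf", gamma=0.5, C=C))
    clf.fit(X_train, y_train)
    results[C] = clf.score(X_test, y_test)

best_C = max(results, key=results.get)  # C with the highest held-out accuracy
print(results, "best C:", best_C)
```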