NPTEL Introduction to Machine Learning Week 5 Assignment Answers 2025

1. Consider a feedforward neural network that performs regression on a p-dimensional input to produce a scalar output. It has m hidden layers and each of these layers has k hidden units. What is the total number of trainable parameters in the network? Ignore the bias terms.

- pk+mk2+k

- pk+(m−1)k2+k

- p2+(m−1)pk+k

- p2+(m−1)pk+k2

Answer :- For Answers Click Here

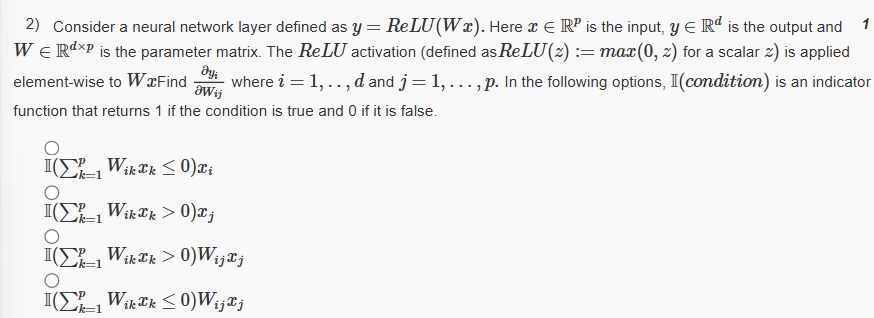

2.

Answer :-

3. Consider a two-layered neural network y=σ(W(B)σ(W(A)x)). Let h=σ(W(A)x) denote the hidden layer representation.W(A) and W(B) are arbitrary weights. Which of the following statement(s) is/are true? Note:∇g(f) denotes the gradient of f w.r.t g.

- ∇h(y) depends on W(A)

- ∇W(A)(y) depends on W(B)

- ∇W(A)(h) depends on W(B)

- ∇W(B)(y) depends on W(A)

Answer :-

4. Which of the following statement(s) about the initialization of neural network weights is/are true for a network that uses the sigmoid activation function?

- Two different initializations of the same network could converge to different minima

- For a given initialization, gradient descent will converge to the same minima irrespective of the learning rate.

- Initializing all weights to the same constant value leads to undesirable results

- Initializing all weights to very large values leads to undesirable results

Answer :-

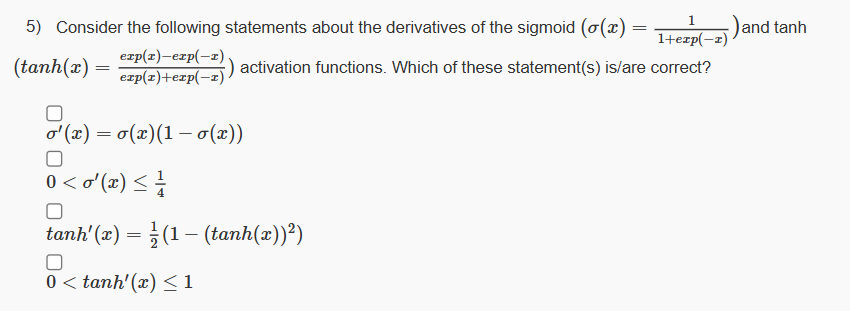

5.

Answer :-

6. A geometric distribution is defined by the p.m.f. f(x;p)=(1−p)(x−1)p for x=1,2,…… Given the samples [4, 5, 6, 5, 4, 3] drawn from this distribution, find the MLE of p.

- 0.111

- 0.222

- 0.333

- 0.444

Answer :- For Answers Click Here

7. Consider a Bernoulli distribution with p=0.7 (true value of the parameter). We draw samples from this distribution and compute an MAP estimate of p by assuming a prior distribution over p

. Let N(μ,σ2) denote a gaussian distribution with a mean μ and variance σ2 .Distributions are normalized as needed. Which of the following statement(s) is/are true?

- If the prior is N(0.6,0.1), we will likely require fewer samples for converging to the true value than if the prior is N(0.4,0.1)

- If the prior is N(0.4,0.1), we will likely require fewer samples for converging to the true value than if the prior is N(0.6,0.1)

- With a prior of N(0.1,0.001), the estimate will never converge to the true value, regardless of the number of samples used.

- With a prior of U(0,0.5) (i.e. uniform distribution between 0 and 0.5), the estimate will never converge to the true value, regardless of the number of samples used.

Answer :-

8. Which of the following statement(s) about parameter estimation techniques is/are true?

- To obtain a distribution over the predicted values for a new data point, we need to compute an integral over the parameter space.

- The MAP estimate of the parameter gives a point prediction for a new data point.

- The MLE of a parameter gives a distribution of predicted values for a new data point.

- We need a point estimate of the parameter to compute a distribution of the predicted values for a new data point.

Answer :-

9. In a classification setting, it is common in machine learning applications to minimize the discrete cross entropy loss given by HCE(p,q)=−Σipilogqi where pi and qi are the true and predicted distributions (pi∈{0,1} depending on the label of the corresponding entry). Which of the following statement(s) about minimizing the cross entropy loss is/are true?

- Minimizing HCE(p,q) is equivalent to minimizing the (self) entropy H(q)

- Minimizing HCE(p,q) is equivalent to minimizing HCE(q,p).

- Minimizing HCE(p,q) is equivalent to minimizing the KL divergence DKL(p||q)

- Minimizing HCE(p,q) is equivalent to minimizing the KL divergence DKL(q||p)

Answer :-

10. Which of the following statement(s) about activation functions is/are NOT true?

- Non-linearity of activation functions is not a necessary criterion when designing very deep neural networks

- Saturating non-linear activation functions (derivative →0 as x→±∞) avoid the vanishing gradients problem

- Using the ReLU activation function avoids all problems arising due to gradients being too small.

- The dead neurons problem in ReLU networks can be fixed using a leaky ReLU activation function

Answer :- For Answers Click Here