NPTEL Natural Language Processing Week 7 Assignment Answers 2025

1. Suppose you have a raw text corpus and you compute a word co-occurrence matrix from it. Which of the following algorithm(s) can you utilize to learn word representations? (Choose all that apply)

a. CBOW

b. SVD

c. PCA

d. GloVe

Answer :-

2. What is the method for solving word analogy questions like, given A, B and D, find C such that A:B::C:D, using word vectors?

a. Vc = Va + (Vb – Vd), then use cosine similarity to find the closest word to Vc.

b. Vc = Va + (Vd – Vb), then do dictionary lookup for Vc.

c. Vc = Vd + (Va – Vb), then use cosine similarity to find the closest word to Vc.

d. Vc = Vd + (Va – Vb), then do dictionary lookup for Vc.

e. None of the above

Answer :-
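The relation A:B::C:D is usually read as Va – Vb ≈ Vc – Vd, which rearranges to Vc ≈ Vd + (Va – Vb). Because the computed vector rarely coincides exactly with a vocabulary entry, the nearest word is normally picked by cosine similarity rather than by exact lookup. The sketch below illustrates this with hand-made 2-dimensional vectors. The words, the vector values, and the helper names `cosine` and `solve_analogy` are all hypothetical, and excluding the three input words from the candidates is an assumption borrowed from common practice.

```python
import math

# Hypothetical toy embeddings, chosen by hand for illustration only.
vectors = {
    "man": [1.0, 0.0],
    "woman": [1.0, 1.0],
    "king": [3.0, 0.0],
    "queen": [3.0, 1.0],
    "apple": [0.0, 2.0],
}


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def solve_analogy(a, b, d, vectors):
    """Given A, B and D, return the word C that best fits A:B::C:D."""
    # Vc = Vd + (Va - Vb), computed component by component.
    target = [vd + va - vb
              for va, vb, vd in zip(vectors[a], vectors[b], vectors[d])]
    # Assumption: the three input words are not allowed as answers.
    candidates = [w for w in vectors if w not in (a, b, d)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


# man : woman :: ? : queen
print(solve_analogy("man", "woman", "queen", vectors))  # king
```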

3. What is the value of PMI(w1, w2) for C(w1) = 100, C(w2) = 2500, C(w1, w2) = 320, N = 50000?

N: Total number of documents.

C(wi): Number of documents in which wi has appeared.

C(wi, wj): Number of documents in which both words have appeared.

Note: Use base 2 in logarithm.

a. 4

b. 5

c. 6

d. 5.64

Answer :-
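With document counts, PMI(w1, w2) = log2( P(w1, w2) / (P(w1) P(w2)) ), where each probability is a count divided by N; this simplifies to log2( C(w1, w2) · N / (C(w1) · C(w2)) ). A minimal sketch of that arithmetic follows. The function name `pmi` and its parameter names are illustrative, not taken from the assignment.

```python
import math


def pmi(c_w1, c_w2, c_w1_w2, n):
    """Pointwise mutual information (base 2) from document counts."""
    # P(w1, w2) / (P(w1) * P(w2)) with every probability written as count / n.
    ratio = (c_w1_w2 * n) / (c_w1 * c_w2)
    return math.log2(ratio)


# Counts from the question: the ratio is 16,000,000 / 250,000 = 64.
print(pmi(c_w1=100, c_w2=2500, c_w1_w2=320, n=50000))  # 6.0
```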

4. Given two binary word vectors w1 and w2 as follows:

w1 = [1010011010]

w2 = [0011111100]

Compute the Dice and Jaccard similarity between them.

a. 6/11, 3/8

b. 10/11, 5/6

c. 4/9, 2/7

d. 5/9, 5/8

Answer :-
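For binary vectors, the usual definitions are Dice = 2|X ∩ Y| / (|X| + |Y|) and Jaccard = |X ∩ Y| / |X ∪ Y|, where |X| and |Y| count the 1s in each vector and |X ∩ Y| counts positions where both are 1. The sketch below applies those definitions to the two vectors from the question, written as bit strings. The function name `dice_and_jaccard` is illustrative.

```python
from fractions import Fraction


def dice_and_jaccard(u, v):
    """Dice and Jaccard similarity of two equal-length bit strings."""
    both = sum(1 for a, b in zip(u, v) if a == "1" and b == "1")
    ones_u = u.count("1")
    ones_v = v.count("1")
    dice = Fraction(2 * both, ones_u + ones_v)
    # |X ∪ Y| = |X| + |Y| - |X ∩ Y|
    jaccard = Fraction(both, ones_u + ones_v - both)
    return dice, jaccard


w1 = "1010011010"  # five 1s
w2 = "0011111100"  # six 1s, three of them shared with w1
dice, jaccard = dice_and_jaccard(w1, w2)
print(dice, jaccard)  # 6/11 3/8
```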

5. Consider two probability distributions p and q for two words. Compute their similarity scores with KL-divergence.

p = [0.20, 0.75, 0.50]

q = [0.90, 0.10, 0.25]

Note: Use base 2 in logarithm.

a. 4.704, 1.720

b. 1.692, 0.553

c. 2.246, 1.412

d. 3.213, 2.426

Answer :-
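KL-divergence is asymmetric, D(p‖q) = Σ p_i log2(p_i / q_i), so each option presumably lists D(p‖q) followed by D(q‖p); that reading is an assumption. The sketch below plugs the values in exactly as printed, even though neither p nor q as given sums to 1. The function name `kl_divergence` is illustrative.

```python
import math


def kl_divergence(p, q):
    """D(p || q) in bits; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Values copied from the question without renormalizing.
p = [0.20, 0.75, 0.50]
q = [0.90, 0.10, 0.25]

print(round(kl_divergence(p, q), 3))  # 2.246
print(round(kl_divergence(q, p), 3))  # 1.412
```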

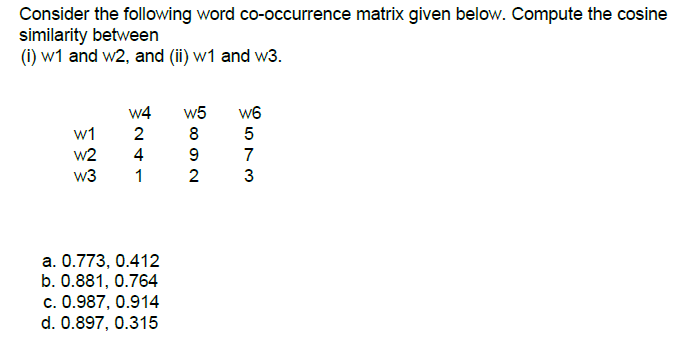

6.

Answer :-

7. Which of the following types of relations can be captured by word2vec (CBOW or Skipgram)?

- Analogy (A:B::C:?)

- Antonymy

- Polysemy

- All of the above

Answer :-