NPTEL Reinforcement Learning Week 2 Assignment Answers 2025

1. Which of the following is true of the UCB algorithm?

- The action with the highest Q value is chosen at every iteration

- After a very large number of iterations, the confidence intervals of unselected actions will not change much

- The true expected value of an action always lies within its estimated confidence interval.

- With a small probability ϵ, we select a random action to ensure adequate exploration of the action space.

Answer :-

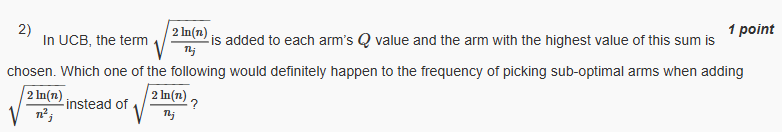

2.

- Sub-optimal arms would be chosen more frequently

- Sub-optimal arms would be chosen less frequently

- Makes no change to the frequency of picking sub-optimal arms.

- Sub-optimal arms could be chosen less or more frequently, depending on the samples.

Answer :-

3. In a 4-arm bandit problem, after executing 100 iterations of the UCB algorithm, the estimated Q values are Q_100(1) = 1.73, Q_100(2) = 1.83, Q_100(3) = 1.89, Q_100(4) = 1.55, and the number of times each arm has been sampled is n_1 = 25, n_2 = 20, n_3 = 30, n_4 = 15. Which arm will be sampled in the next trial?

- Arm 1

- Arm 2

- Arm 3

- Arm 4

Answer :-
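The arithmetic behind question 3 can be checked with a short script. This is a minimal sketch that assumes the standard UCB1 index Q_j + sqrt(2 ln t / n_j) with t = 100; the function name `ucb_indices` and the constant `c` are illustrative, and a course variant with a different exploration constant could give different values.

```python
import math

def ucb_indices(q, n, t, c=2.0):
    """Return Q_j + sqrt(c * ln(t) / n_j) for each arm j (assumed UCB1 form)."""
    return [qj + math.sqrt(c * math.log(t) / nj) for qj, nj in zip(q, n)]

q = [1.73, 1.83, 1.89, 1.55]   # Q estimates from the question
n = [25, 20, 30, 15]           # pull counts from the question
idx = ucb_indices(q, n, t=100)

# Arms are numbered from 1 in the question, so shift the 0-based position.
next_arm = max(range(len(idx)), key=idx.__getitem__) + 1

for arm, value in enumerate(idx, start=1):
    print(f"Arm {arm}: {value:.3f}")
print("Arm with the largest index:", next_arm)
```

Under this assumption the indices come out to roughly 2.337, 2.509, 2.444 and 2.334, so Arm 2 has the largest upper confidence bound even though Arm 3 has the highest Q estimate.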

4. We need 8 rounds of median elimination to get an (ϵ,δ)-PAC arm. Approximately how many samples would have been required using the naive (ϵ,δ)-PAC algorithm, given (ϵ,δ) = (1/2, 1/e)? (Choose the value closest to the correct answer.)

- 15000

- 10000

- 500

- 20000

Answer :-
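The estimate for question 4 can be sketched in two steps. The code below assumes that median elimination halves the arm set every round, so 8 rounds correspond to k = 2^8 arms, and that the naive algorithm pulls each arm (2/ϵ²) ln(2k/δ) times. The leading constant is an assumption: some presentations use 4/ϵ² instead, which is why it is exposed as the hypothetical parameter `c`.

```python
import math

def arms_from_rounds(rounds):
    # Each round discards half of the arms, so k = 2 ** rounds arms at the start.
    return 2 ** rounds

def naive_pac_samples(k, eps, delta, c=2.0):
    # Assumed per-arm sample count: (c / eps^2) * ln(2k / delta).
    per_arm = (c / eps ** 2) * math.log(2 * k / delta)
    return k * per_arm

k = arms_from_rounds(8)
total = naive_pac_samples(k, eps=0.5, delta=1 / math.e)
print("arms:", k)
print("total samples (naive):", round(total))
```

With these assumptions the total is a little under 15000 samples for 256 arms.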

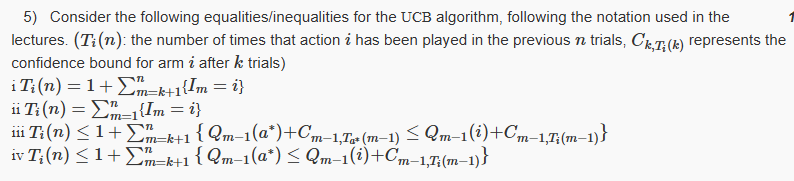

5.

Which of these equalities/inequalities are correct ?

- i and iii

- ii and iv

- i, ii, iii

- i, ii, iii, iv

Answer :-

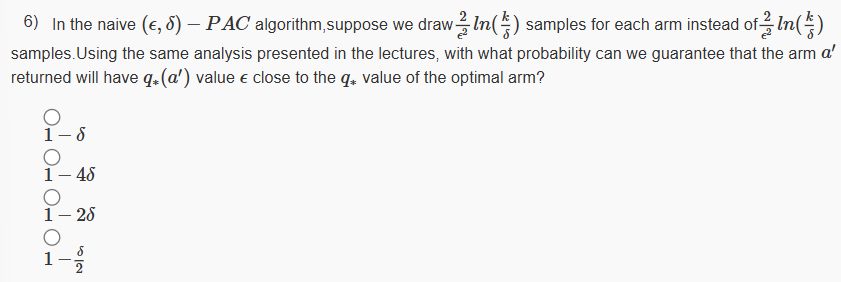

6.

Answer :-

7. In the median elimination method for (ϵ,δ)-PAC bounds, we claim that for every phase l, Pr[A ≤ B + ϵ_l] > 1 − δ_l, where S_l is the set of arms remaining in the l-th phase.

Consider the following statements:

(i) A is the maximum of rewards of the true best arm in S_l, i.e., in the l-th phase

(ii) B is the maximum of rewards of the true best arm in S_{l+1}, i.e., in the (l+1)-th phase

(iii) B is the minimum of rewards of the true best arm in S_{l+1}, i.e., in the (l+1)-th phase

(iv) A is the minimum of rewards of the true best arm in S_l, i.e., in the l-th phase

(v) A is the maximum of rewards of the true best arm in S_{l+1}, i.e., in the (l+1)-th phase

(vi) B is the maximum of rewards of the true best arm in S_l, i.e., in the l-th phase

Which of the statements above are correct?

- i and ii

- iii and iv

- v and vi

- i and iii

Answer :-
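To see where the per-phase claim comes from, it can help to look at the phases themselves. The following is a minimal sketch of median elimination, assuming the usual schedule ϵ_1 = ϵ/4, δ_1 = δ/2, ϵ_{l+1} = (3/4) ϵ_l, δ_{l+1} = δ_l / 2 and (4/ϵ_l²) ln(3/δ_l) pulls per surviving arm in phase l. The `pull` callback and the Bernoulli test arms are hypothetical stand-ins for a real bandit.

```python
import math
import random

def median_elimination(pull, k, eps, delta):
    """Return the index of one surviving arm after repeated halving."""
    arms = list(range(k))
    eps_l, delta_l = eps / 4, delta / 2
    while len(arms) > 1:
        # Pulls per surviving arm in this phase (assumed schedule).
        m = math.ceil((4 / eps_l ** 2) * math.log(3 / delta_l))
        means = {a: sum(pull(a) for _ in range(m)) / m for a in arms}
        # Keep the better half: arms at or above the empirical median.
        arms.sort(key=means.__getitem__, reverse=True)
        arms = arms[: math.ceil(len(arms) / 2)]
        # Tighten the per-phase accuracy and confidence budgets.
        eps_l, delta_l = 0.75 * eps_l, delta_l / 2
    return arms[0]

rng = random.Random(0)
true_means = [0.1 * i for i in range(1, 9)]  # 8 hypothetical Bernoulli arms

def pull(a):
    return 1.0 if rng.random() < true_means[a] else 0.0

best = median_elimination(pull, k=8, eps=0.5, delta=0.1)
print("returned arm:", best, "with true mean", true_means[best])
```

Each pass through the loop is one phase: the best arm among the survivors may be slightly worse than the best arm before the phase, by at most ϵ_l with probability at least 1 − δ_l, and the budgets ϵ_l and δ_l are chosen so that their sums over all phases stay within ϵ and δ.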

8. Which of the following statements is NOT true about Thompson Sampling or Posterior Sampling?

- After each sample is drawn, the q∗ distribution for that sampled arm is updated to be closer to the true distribution.

- Thompson sampling has been shown to generally give better regret bounds than UCB.

- In Thompson sampling, we do not need to eliminate arms each round to get good sample complexity

- The algorithm requires that we use Gaussian priors to represent distributions over q∗ values for each arm

Answer :-
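For question 8, a small example shows that posterior sampling does not depend on any particular prior family. The sketch below assumes Bernoulli rewards with Beta(1, 1) priors, a common conjugate choice; the function name `thompson_bernoulli` and the arm means are made up for illustration.

```python
import random

def thompson_bernoulli(true_means, steps, seed=0):
    """Run Beta-Bernoulli Thompson sampling and return pull counts per arm."""
    rng = random.Random(seed)
    k = len(true_means)
    alpha = [1.0] * k  # Beta(1, 1) is the uniform prior on [0, 1]
    beta = [1.0] * k
    pulls = [0] * k
    for _ in range(steps):
        # Draw one plausible mean per arm from its current posterior.
        samples = [rng.betavariate(alpha[a], beta[a]) for a in range(k)]
        arm = max(range(k), key=samples.__getitem__)
        reward = 1 if rng.random() < true_means[arm] else 0
        # Conjugate update: only the pulled arm's posterior changes.
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1
    return pulls

pulls = thompson_bernoulli([0.2, 0.5, 0.8], steps=2000)
print(pulls)
```

No arm is ever removed from consideration here; weak arms are simply sampled less often as their posteriors concentrate on low values.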

9. Assertion: The confidence bound of each arm in the UCB algorithm cannot increase with iterations. Reason: The n_j term in the denominator ensures that the confidence bound remains the same for unselected arms and decreases for the selected arm.

- Assertion and Reason are both true and Reason is a correct explanation of Assertion

- Assertion and Reason are both true and Reason is not a correct explanation of Assertion

- Assertion is true and Reason is false

- Both Assertion and Reason are false

Answer :-
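The behaviour of the confidence bound in question 9 can be checked numerically. This sketch assumes the UCB1 exploration bonus sqrt(2 ln t / n_j); the helper `ucb_bonus` and the chosen values of t and n_j are illustrative only.

```python
import math

def ucb_bonus(t, n_j, c=2.0):
    # Assumed UCB1 exploration bonus for an arm pulled n_j times by step t.
    return math.sqrt(c * math.log(t) / n_j)

# An arm that is never selected again: n_j stays at 10 while t grows.
bonuses = [ucb_bonus(t, 10) for t in (100, 1000, 10000)]
print([round(b, 3) for b in bonuses])

# An arm that is selected at step 100: both t and n_j increase by one.
before = ucb_bonus(100, 10)
after = ucb_bonus(101, 11)
print(round(before, 3), round(after, 3))
```

Because ln t sits in the numerator, the bonus of an arm that is not selected keeps growing, only slowly, while the bonus of the selected arm shrinks as n_j increases.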

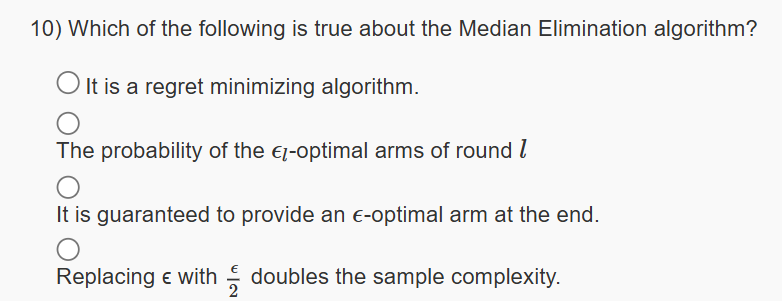

10.

Answer :-